User Manual Validation Sample Size Requirements: Is It Time to Reevaluate?

Introduction

According to the 2016 FDA Human Factors Guidance, Human Factors Validation Testing should include a minimum of 15 test participants from each intended user group.1 This sample size has been debated, as some experts suggest that fewer than 15 test participants may be enough to achieve similar results. UserWise collected data from several usability studies in which user documentation was evaluated, to gain insight into the optimal number of test participants needed to uncover all use errors when specifically testing the comprehension and understandability of written instructions.

User Manual Comprehension Testing

During simulated use testing, participants may refer to the instructions if they choose to, but they should not be asked to use them. As a result, participants do not necessarily refer to the instructions when completing a task during the simulated use portion of usability testing. Therefore, to evaluate the effectiveness of user documentation as a risk control measure, test participants may also be asked questions, in addition to being observed, after simulated use testing is completed. UserWise refers to this evaluation of the user documentation as “User Manual Comprehension Testing.”

Virzi (1992) and Nielsen (1993) vs Faulkner (2003)

Robert A. Virzi and Jakob Nielsen published research on defining the optimal sample size for uncovering usability problems in usability testing, with the aim of improving user interface design and reducing testing costs.2,3 Their research suggests that 5 participants may be a sufficient sample size for most usability testing because the nature of usability research is qualitative rather than quantitative. Thus, traditional empirical statistical analysis is not necessary to adequately assess the results of usability testing. Virzi argues that 4 to 5 participants is an adequate sample size to detect approximately 80% of usability problems, and that additional subjects are increasingly less likely to reveal any new information. Furthermore, Virzi claims that the most pervasive usability problems are likely to be detected by the first several participants.
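The diminishing-returns argument is often summarized with a simple cumulative detection model: if each participant independently reveals a given problem with probability p, the expected share of problems seen after n participants is 1 - (1 - p)^n. The Python sketch below tabulates that curve. The value p = 0.31 is an assumption chosen for illustration (a figure often quoted in the usability literature), and the function name is hypothetical. Real problems differ in how often they occur, so this is a rough guide rather than a prediction.

```python
def expected_share_found(p: float, n: int) -> float:
    """Expected fraction of problems seen at least once by n participants,
    assuming each participant independently reveals a problem with probability p."""
    return 1.0 - (1.0 - p) ** n


# p = 0.31 is an illustrative assumption, not a value taken from this article.
P_DETECT = 0.31

for n in (1, 3, 5, 10, 15):
    print(f"{n:>2} participants: {expected_share_found(P_DETECT, n):.0%}")
```

Under these assumptions the curve reaches roughly 84% at 5 participants and flattens quickly afterwards, which is the pattern Virzi describes. The model says nothing about how much an individual group of 5 can deviate from that average.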

Nielsen’s support of the 5-user assumption is based on retrospective mathematical calculations of use error rates on data collected from 13 studies. When predicting the confidence intervals of his results, Nielsen used a z distribution, which is suitable for large sample sizes, as opposed to the t distribution, which is suitable for small sample sizes. This assumption may inflate the power of his predictions.4
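To see why the choice of distribution matters for a small sample, the Python sketch below compares 95% confidence interval half-widths computed with a z critical value and with a t critical value. The 13 values are made up for illustration (the count echoes the 13 studies mentioned above, but these are not Nielsen's data), and the t critical value is hardcoded from a standard t table for 12 degrees of freedom.

```python
import math
import statistics

# Made-up sample of 13 values (hypothetical; not Nielsen's data).
sample = [0.16, 0.22, 0.28, 0.29, 0.31, 0.32, 0.33,
          0.35, 0.36, 0.38, 0.42, 0.45, 0.51]

n = len(sample)
mean = statistics.mean(sample)
std_err = statistics.stdev(sample) / math.sqrt(n)

# Two-sided 95% critical values.
z_crit = statistics.NormalDist().inv_cdf(0.975)  # about 1.96
T_CRIT_DF12 = 2.179  # standard t-table value for n - 1 = 12 degrees of freedom

z_half_width = z_crit * std_err
t_half_width = T_CRIT_DF12 * std_err

print(f"mean = {mean:.3f}")
print(f"95% CI half-width with z: {z_half_width:.3f}")
print(f"95% CI half-width with t: {t_half_width:.3f}")
```

For a sample of 13, the t-based interval is about 11% wider than the z-based one (2.179 versus 1.96), so using z makes the estimate look more precise than the data support.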

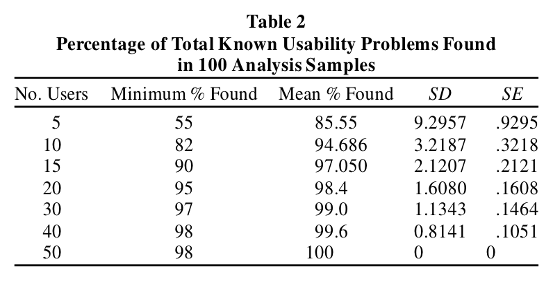

While Virzi's and Nielsen’s findings helped usability professionals struggling with limited budgets to justify their projects, some usability experts questioned whether the 5-user assumption was appropriate and transferable to all work in the field. In particular, Laura Faulkner challenged the 5-user assumption by calculating the average percentage of usability problems uncovered with different sample sizes. In her study, a simple computer task was designed and given to 60 participants with different levels of skill and experience. Faulkner then randomly sampled users into sets of different sizes and calculated the average percentage of problems identified for each sample size. In agreement with Virzi and Nielsen, Faulkner found that the average percentage of usability problems found with 5 users was approximately 85%. However, with 5 users, the percentage varied from 55% to nearly 100%, meaning that with any set of 5 users, almost half of the identified problems could have been missed.4

Figure 1. Summary of Faulkner's findings of usability problems found by varied participant sample sizes (Faulkner, 2003).

Faulkner concluded that adding more participants to the sample increased the probability of identifying all usability problems. Furthermore, adding more participants increased the minimum percentage of usability problems found. While 5 participants can uncover 85% of usability problems on average, there is a risk of missing a significant number of them, which can have serious implications in the context of medical devices, especially if the missed problems have the potential to cause serious harm.4

Figure 2. The effect of adding users on reducing variance in the percentage of known usability problems found. Each point represents a single set of randomly sampled users. The horizontal lines show the mean for each group of 100 (Faulkner, 2003).

Data Analysis

UserWise analyzed task data collected from the User Manual Comprehension portion of usability studies for 2 different medical devices. The goal was to determine the optimal sample size per user group for User Manual Comprehension tasks, using Faulkner’s study design as a model.

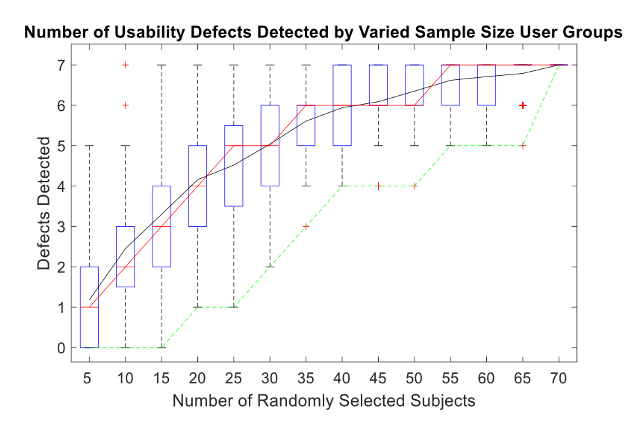

The first study examined was a usability study of lay participants using a diagnostic breath measurement product. UserWise recruited 70 lay user participants who had limited to no training or prior experience using this kind of device. During the Human Factors Validation Study, 7 usability problems regarding the User Manual were uncovered.

Similar to the Faulkner study, the author wrote a MATLAB program to draw random participant results from the total population of 70 participants. The program ran 100 trials for each sample size (i.e., 5, 10, …, 70). The results are shown in a boxplot where the red, black, and green trendlines indicate the median, mean, and minimum of each sample size, respectively. The blue boxes represent the middle 50% of all 100 trials for each sample size increment, and the whiskers span the upper and lower quartiles of the dataset, excluding outliers. Finally, the red crosshairs represent outlier groups. These data are summarized in Figure 3.

Figure 3. Diagnostic breath measurement product usability defects detected by varied sample size user groups.

As shown in Figure 3, for 50% of the data points collected with 5 participants, only 0 to 2 out of the 7 defects were detected. Therefore, uncovering all usability problems with a sample size of 5 participants is unlikely for this particular device.

When the sample size was increased to 10 participants, 1 outlier group successfully identified all 7 defects, indicating that a sample size of 10 users could potentially find all the defects, although this outcome is quite unlikely.

However, when the sample size was increased to 15 participants, all defects were identified within the upper quartile range. Therefore, 15 participants may be the optimal sample size for this study because it is the smallest sample size at which detecting all usability defects was not an outlier result. According to this assumption, testing 15 out of 70 participants for User Manual Comprehension tasks for this study would have provided sufficient results for detecting all usability problems related to user documentation.
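The resampling procedure behind Figure 3 can be sketched in Python as follows. This is not the MATLAB program used for the analysis. It is a minimal illustration that assumes participants are drawn without replacement, and it runs on synthetic data, because the per-participant results are not given here. The detection rates in `assumed_rates` and the names `found_by` and `problems_found` are hypothetical.

```python
import random
import statistics

N_PARTICIPANTS = 70   # from the diagnostic product study
N_PROBLEMS = 7        # from the diagnostic product study
N_TRIALS = 100        # trials per sample size, as described above

rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Hypothetical data: each problem gets an assumed chance of being revealed
# by any one participant. These rates are NOT taken from the study.
assumed_rates = [0.30, 0.20, 0.10, 0.05, 0.05, 0.03, 0.03]
found_by = [
    {k for k, rate in enumerate(assumed_rates) if rng.random() < rate}
    for _ in range(N_PARTICIPANTS)
]


def problems_found(sample):
    """Number of distinct problems revealed by at least one sampled participant."""
    return len(set().union(*(found_by[i] for i in sample)))


summary = {}
for size in range(5, N_PARTICIPANTS + 1, 5):
    counts = [
        problems_found(rng.sample(range(N_PARTICIPANTS), size))  # without replacement
        for _ in range(N_TRIALS)
    ]
    summary[size] = (min(counts), statistics.mean(counts),
                     statistics.median(counts), max(counts))

for size, (low, mean, median, high) in summary.items():
    print(f"n={size:>2}: min={low} mean={mean:.2f} median={median} max={high}")
```

Replacing `found_by` with the observed set of problems for each participant would reproduce the kind of per-sample-size minimum, mean, and median shown in the boxplot. At the full sample size every trial contains all participants, so the spread collapses to a single value.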

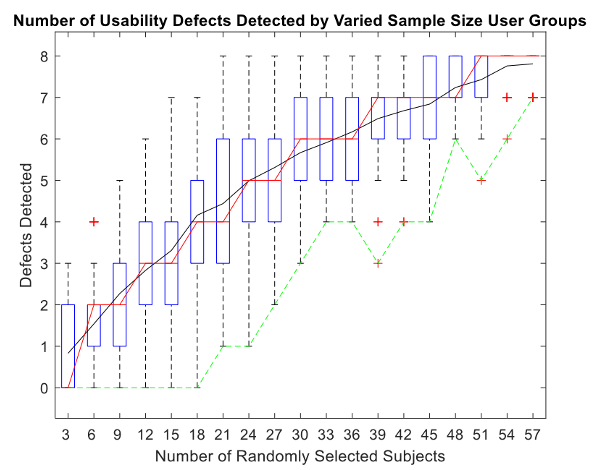

The second device examined was an injection pen. UserWise recruited 61 lay user participants from 2 user groups (Caregivers and People with Parkinson’s Disease), with and without prior experience using injection pens. During the study, 8 usability problems were uncovered regarding the User Manual.

The same MATLAB analysis described for the diagnostic product was also conducted for the injection pen study. The boxplot of results is summarized in Figure 4.

Figure 4. Injection pen usability defects detected by varied sample size user groups.

Using the same logic as described for the diagnostic product, the smallest sample size able to identify all 8 defects was 21 participants. Notably, however, the maximum number of defects identified was 8 while the minimum number found was 1.

For 50% of the data points collected with a sample size of 33 participants, 5 to 7 defects were detected. This suggests that for this device, choosing a sample size of 30 participants produces a higher probability of identifying at least 6 of the 8 defects. However, increasing the number of participants beyond 30 shows a trend of diminishing return on investment. According to this assumption, testing roughly half of the total number of participants (i.e., 30 out of 61 participants) for User Manual Comprehension tasks for this study would have provided sufficient results for detecting most, if not all, usability problems related to user documentation.

Further analysis of the individual participant results indicates that 3 of the usability problems were each detected by only 1 participant. Thus, all usability problems were uncovered only when these 3 participants were randomly selected to be included in the sample. Two (2) of the 3 participants were People with Parkinson’s Disease and the third was a Caregiver. Their previous experience with the product and the training they received during this usability study varied, as shown in Table 1.

Table 1. Participant Previous Experience and Training

![]()

The results suggest that selecting a subset of multilevel participants (i.e., with varied previous experience, training, and user groups) is imperative for uncovering all usability problems with the User Manual. If only People with Parkinson’s Disease had been evaluated, 3 usability problems identified exclusively by Caregivers would not have been uncovered.
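The dependence on the 3 participants who were each the only one to detect a problem can be put into numbers. Assuming a simple random sample drawn without replacement from the 61 participants (an assumption about the sampling, not a detail confirmed above), the chance that a sample contains all 3 of them follows from a hypergeometric count. The function name below is hypothetical.

```python
from math import comb

N_TOTAL = 61   # participants in the injection pen study
N_KEY = 3      # participants who were each the only one to reveal a problem


def prob_all_key_included(sample_size: int) -> float:
    """Chance that a simple random sample (without replacement)
    contains all N_KEY key participants."""
    if sample_size < N_KEY:
        return 0.0
    return comb(N_TOTAL - N_KEY, sample_size - N_KEY) / comb(N_TOTAL, sample_size)


for n in (5, 15, 21, 30, 45, 61):
    print(f"n={n:>2}: {prob_all_key_included(n):.1%}")
```

Under this assumption, a random sample of 21 participants includes all three only about 4% of the time, and a sample of 30 about 11% of the time. This is why purely random selection rarely uncovers every problem, and why deliberately choosing a varied subset is attractive.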

A diverse subset of participants would be more likely to identify unforeseen use issues with user documentation. Rather than testing all participants on User Manual Comprehension tasks during usability testing, researchers could instead identify a subset of multilevel participants to assess all user documentation tasks. These participants could be randomly selected based on their responses to inclusion screening before testing begins. In turn, reducing the sample size for user documentation testing would align with Virzi’s and Nielsen’s aims to justify the 5-user assumption and limit the financial burden of usability testing. Furthermore, pre-identifying a diverse, multilevel subset would minimize the risk of failing to uncover usability issues and align with Faulkner’s initiative to conduct usability tests with larger samples of multilevel users. Pairing the sample size approach proposed by Virzi and Nielsen with that of Faulkner constitutes an optimal middle ground for conducting User Manual Comprehension testing.

Conclusion

Conducting Human Factors testing with fewer test participants can save time, energy, and money; however, when dealing with medical devices that have the potential to cause serious harm, safety and usability must remain the main priorities. The FDA states that manufacturers should make their own determinations of the necessary number of test participants to include in Human Factors Validation Testing, but that a minimum number of 15 participants should be included for each user population. However, the FDA also notes that the recommended minimum could be higher for specific device types.1

Despite the 5-user model presented by Virzi and Nielsen, it is evident from the case studies conducted by UserWise that a sample size of 5 test participants is not always sufficient to confidently capture all usability problems with user documentation. Additionally, the current FDA recommendation of 15 participants per user group, proposed by Faulkner, has not been modified by the Human Factors community since the draft FDA guidance for Human Factors was initially published in 2011. While 5 users may theoretically be an ideal sample size to detect the most common usability problems, the optimal sample size for uncovering usability problems and minimizing financial burden varies based on the occurrence of the usability issue, the complexity of the device, and the aptitude of the participants. UserWise recommends further investigation of historical usability study data, as performed in the diagnostic product and injection pen case studies, to determine the optimal sample size for evaluating User Manual Comprehension.

Next Steps

If you need Human Factors Validation data for your medical product, UserWise is here to help! We can assist you with identifying and examining each critical task, evaluating the device User Interface (including the User Manual), and then presenting your case to the FDA or other regulatory body. Whether you need help conducting in-person usability testing, executing remote usability testing, or performing an expert review of your product, we can quickly assist you to move your medical innovation forward.

Contact us today to set up a free 1-hour consultation for your project or learn more about our usability engineering services and expertise.

References

1. FDA Guidance: Applying Human Factors and Usability Engineering to Medical Devices (2016)

2. Virzi, R.A. (1992). Refining the test phase of usability evaluation: How many subjects is enough? Human Factors, 34, 457-468.

3. Nielsen, J. (1993). Usability Engineering. Boston: AP Professional.

4. Faulkner, L. (2003). Beyond the five-user assumption: Benefits of increased sample sizes in usability testing. Behavior Research Methods, Instruments, and Computers, 35(3), 379-383.

Savannah Esteve, Alec Portelli | February 3, 2021
